This paper is intended for architects and decision-makers seeking to understand the complexities of building modern AI solutions and the value of leveraging a platform or middleware that is secure, extensible, and usable out of the box (for example, for conversations and visualization). In the AI era, the notion of an operating system extends beyond managing hardware resources to encompass the orchestration of distributed computing environments, data management, and AI workflows. The paper covers what it takes to build a robust AI operating system, from managing large language models (LLMs), retrieval-augmented generation (RAG), and feedback loops such as reinforcement learning from human feedback (RLHF) to multi-tenancy, security, data ingestion, and observability. Finally, we explore how DevRev’s AgentOS platform simplifies these challenges, allowing your teams to focus on core business processes rather than reinventing the wheel on accuracy, reliability, availability, security, and customizability. By using a comprehensive platform like AgentOS, businesses can accelerate AI adoption and innovation without being weighed down by system complexity and management.

What is an operating system?

Conventionally, an operating system has handled tasks such as scheduling and running applications, securely providing access to shared resources (“tenancy”), managing files and networks, and controlling input/output operations. It allocates resources like memory and CPU time to different programs, ensuring they don’t conflict with each other. The OS also ensures that users can interact with the computer through user-friendly interfaces, such as graphical desktops or command-line prompts, making it easier to perform tasks without needing deep technical knowledge of the underlying shared resources.

Beyond managing basic functions, modern operating systems provide essential services like networking, security, and device management. They protect data and system resources through built-in security measures, allowing multiple users and applications to safely share the same machine.

As described above, an operating system performs three main functions that align with its core responsibilities. First, it manages the computer’s resources, including the CPU, memory, and peripherals like disk drives and printers, allocating them so that multiple programs can run without conflicts. This resource management keeps hardware components in efficient use and prevents any one process from monopolizing the system.

Second, the OS establishes a user interface, enabling users to interact with the computer easily. Whether through a graphical user interface (GUI), as in Windows and macOS, or a command-line interface (CLI), such as the shells common on Linux, the operating system provides a bridge between the user’s actions and the machine’s hardware, simplifying tasks such as file management or running programs.

Lastly, the OS executes and provides services for application software, making sure that programs run smoothly by handling tasks such as loading applications into memory and coordinating the use of shared resources. It also offers essential services like file storage, networking, and error handling, ensuring that applications can focus on their specific tasks while relying on the operating system to manage underlying complexities.
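To make the resource-management function concrete, here is a minimal sketch of round-robin time slicing, one classic way an OS keeps any single process from monopolizing the CPU. It is a simplified teaching model that assumes fixed CPU demands and a fixed quantum; real schedulers also weigh priorities, I/O waits, and fairness. The `Process` type and `round_robin` function are illustrative names, not part of any OS API.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    remaining: int  # CPU time units the process still needs


def round_robin(processes: list[Process], quantum: int) -> list[tuple[str, int]]:
    """Run processes in fixed time slices; return the timeline as (name, units run)."""
    if quantum < 1:
        raise ValueError("quantum must be at least 1")
    ready = deque(processes)
    timeline: list[tuple[str, int]] = []
    while ready:
        proc = ready.popleft()
        ran = min(quantum, proc.remaining)  # never run longer than one quantum
        proc.remaining -= ran
        timeline.append((proc.name, ran))
        if proc.remaining > 0:
            ready.append(proc)  # preempted: rejoin the back of the ready queue
    return timeline


if __name__ == "__main__":
    jobs = [Process("editor", 3), Process("compiler", 5), Process("backup", 2)]
    for name, units in round_robin(jobs, quantum=2):
        print(f"{name} ran for {units} unit(s)")
```

With a quantum of 2, each job gets a turn before any job runs again, so the 5-unit compiler job cannot starve the shorter editor and backup jobs.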

Abstracting the OS

In the same way that an operating system manages a computer’s hardware and software resources, AWS (Amazon Web Services) and GCP (Google Cloud Platform) can be seen as abstracted operating systems for the cloud. Instead of controlling a single machine’s CPU, memory, and storage, they manage vast networks of servers, storage, and virtualized infrastructure across the globe.

Similarly, while a traditional operating system provides a user interface to simplify interactions with hardware, AWS provides easy-to-use interfaces through its management console, APIs, configuration files, and command-line tools. Users can interact with complex services like databases, networking, and storage through these interfaces, much as an OS lets users interact with applications and lets applications interact with storage and networks.

AWS and GCP, much like an operating system, provide services for running and managing applications and allow them to run at scale. Just as an OS loads applications into memory, ensures security, and handles multitasking, AWS offers cloud-native services like EC2 for virtual servers, S3 for storage, and Lambda for serverless functions. These services deliver high availability, scalability, and built-in security, abstracting the complexities of infrastructure management so developers can focus on their application logic.

In essence, hyperscalers implement a generic, composable layer that addresses non-functional requirements such as scalability, availability, security, and performance. By offering building blocks like compute, storage, networking, and monitoring, cloud operating systems enable developers to compose solutions that meet business needs while leveraging pre-built features like auto-scaling, multi-region availability, and encryption. This modularity simplifies achieving reliability, efficiency, and security across applications, allowing developers to focus on core business needs.
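As an illustration of the auto-scaling logic a cloud operating system provides out of the box, the sketch below applies a proportional target-tracking rule: scale the replica count by the ratio of the observed metric to its target. The formula matches the one documented for the Kubernetes Horizontal Pod Autoscaler; AWS target-tracking policies follow the same idea, though their exact algorithm differs. The function name and the `min_r`/`max_r` bounds are assumptions for illustration, and the sketch omits the tolerance band and stabilization windows that production autoscalers add.

```python
import math


def desired_replicas(current: int, observed: float, target: float,
                     min_r: int = 1, max_r: int = 20) -> int:
    """Proportional target tracking: desired = ceil(current * observed / target), clamped."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    raw = math.ceil(current * observed / target)
    return max(min_r, min(max_r, raw))  # keep within configured bounds


if __name__ == "__main__":
    print(desired_replicas(4, observed=90.0, target=60.0))  # 6: scale out under load
    print(desired_replicas(4, observed=30.0, target=60.0))  # 2: scale in when idle
```

For example, 4 replicas averaging 90% CPU against a 60% target scale to ceil(4 × 90 / 60) = 6 replicas.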

What does it take to build an AI engine?

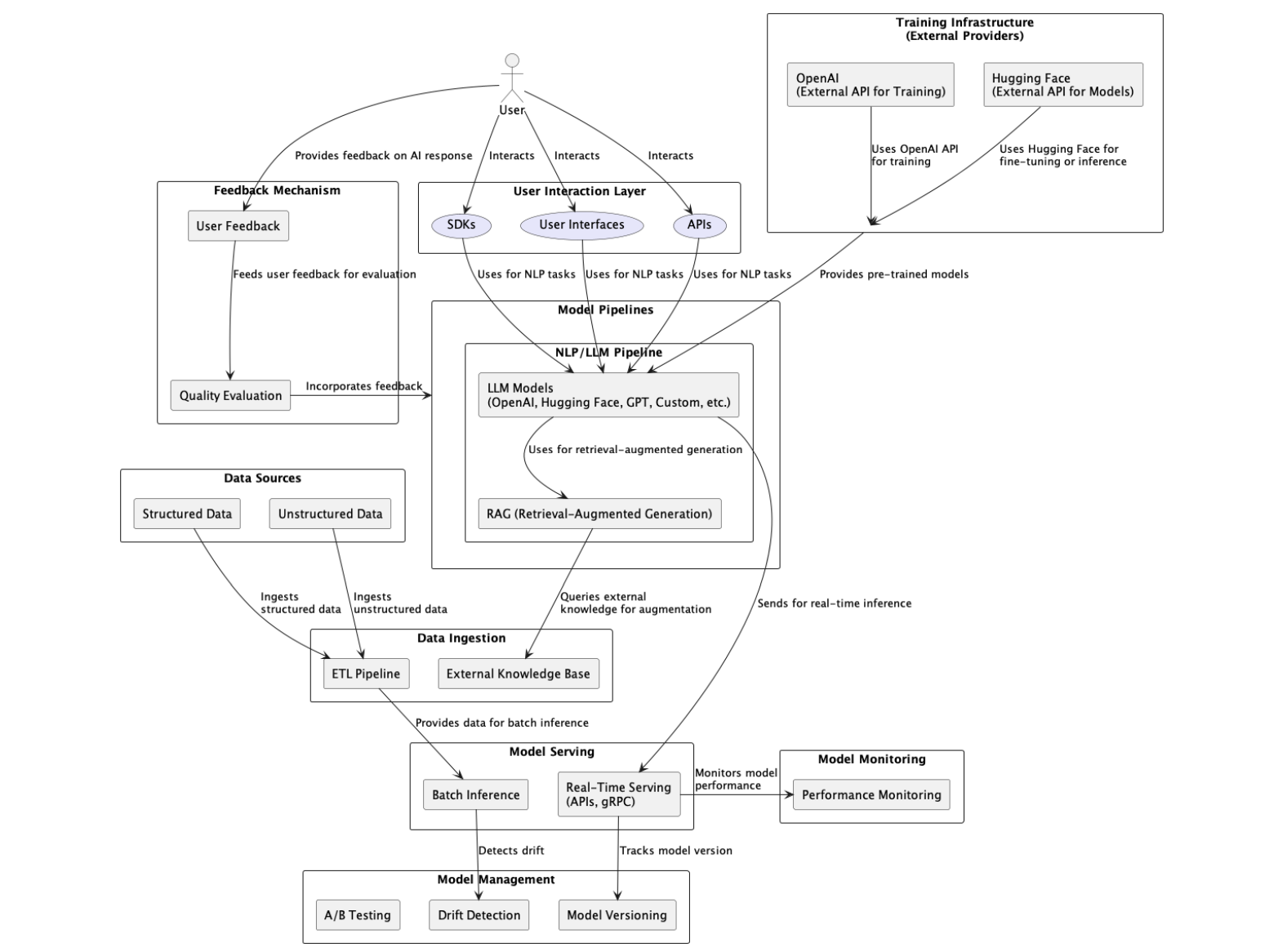

Fig 1. A multi-layered AI engine

Building a modern AI solution that leverages large language models (LLMs) and other AI model architectures requires a well-designed architecture focused on scalability, performance, security, and flexibility, along with AI-specific needs such as vector databases and feedback loops. Such an architecture must integrate various components that work together to provide robust capabilities across data processing, model training, and deployment.

Key elements of the system include data ingestion pipelines that handle both structured and unstructured data, enabling real-time and batch processing. Distributed infrastructure, such as GPU/TPU clusters, is essential for training and fine-tuning models efficiently, when required. Utilizing pre-trained models from providers like OpenAI or HuggingFace, combined with transfer learning, can significantly accelerate development. Additionally, RAG improves model outputs by grounding generation in external data retrieved at query time, while feedback loops such as RLHF use human ratings to refine model behavior over time.
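A minimal sketch of the RAG pattern follows: rank stored passages by similarity to the question, then prepend the best matches to the prompt sent to the model. To stay self-contained it substitutes a bag-of-words term-count vector for a learned embedding model and a Python list for a vector database; `embed`, `retrieve`, and `build_prompt` are hypothetical names, and no actual LLM call is made.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query (a vector-DB query in practice)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user question with retrieved passages before calling an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    kb = [
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "To request a refund, open a ticket in the portal.",
    ]
    print(build_prompt("How do I get a refund?", kb, k=1))
```

Swapping `embed` for a real embedding model and `retrieve` for a vector-database query preserves the same shape: retrieve, augment, then generate.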

For effective deployment, the solution should support real-time inference through HTTP APIs or gRPC for low-latency tasks, along with batch inference for large-scale analytics. Auto-scaling and caching mechanisms are crucial for optimizing performance, while tools for model versioning, drift detection, and A/B testing support ongoing monitoring and iterative improvement. Incorporating user feedback into the system allows continuous refinement of AI models, while adherence to security and privacy regulations ensures trust and operational stability.
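Caching is the simplest of these optimizations to illustrate. The sketch below wraps a model call in a least-recently-used (LRU) cache keyed on the model name and prompt, so repeated identical requests skip inference. The `generate` callable stands in for whatever inference endpoint is used and is an assumption; exact-match caching like this only fits deterministic settings (for example, temperature 0) or cases where reusing an earlier answer is acceptable.

```python
from collections import OrderedDict
from typing import Callable


class InferenceCache:
    """LRU cache for model responses keyed on (model, prompt)."""

    def __init__(self, generate: Callable[[str, str], str], capacity: int = 1024):
        self._generate = generate  # hypothetical call into an inference endpoint
        self._capacity = capacity
        self._entries: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def complete(self, model: str, prompt: str) -> str:
        key = (model, prompt)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        result = self._generate(model, prompt)
        self._entries[key] = result
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return result
```

The same wrapper can sit in front of any provider, which is why caching is usually placed in the shared middleware layer rather than in each application.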

Consider the simplified component diagram for an example AI solution in Fig 2. It omits most non-functional requirements, such as security, scalability, and audit, but provides a generic blueprint for a typical system. While a cloud operating system like AWS or GCP provides a broad range of services that could be used for building AI solutions, an architecture implementing this diagram, incorporating LLMs, RAG, external training providers, and fine-tuning APIs, would be challenging to build on AWS alone. This complexity arises from several limitations in standard cloud OS offerings, particularly in model training, external model integration, multi-tenancy, and the variety of services needed for specialized workflows.

Fig 2. Simplified schematic diagram for an example solution

Building such an architecture solely on AWS or GCP infrastructure would face limitations due to the need for external providers, such as OpenAI and HuggingFace, which offer specialized pre-trained models and fine-tuning capabilities beyond AWS’s built-in AI services like SageMaker. Integrating these external providers requires extensive glue code to handle API communication, authentication, data flow management, and synchronized scaling. Additionally, RAG demands integration with external knowledge bases and vector databases, which may not always be hosted on AWS. Managing these queries in real time adds complexity and cost, requiring middleware to synchronize data retrieval, handle latency, and manage different data sources. Cross-platform scalability also presents a challenge, as AWS’s native auto-scaling features don’t easily extend to external APIs or platforms. This would necessitate custom code to ensure consistent performance and load management across multiple providers. Furthermore, model and data management, especially when involving external models, requires additional orchestration to synchronize versioning, updates, and feedback loops. Lastly, security and compliance become more complicated when handling sensitive data across multiple vendors, requiring custom solutions to maintain consistent security policies and data protection across platforms.
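The glue code described above often starts as a thin abstraction over several model providers. The sketch below, assuming a hypothetical `CompletionProvider` interface that each vendor adapter would implement, tries providers in priority order and retries transient failures before falling back. It deliberately leaves out authentication, timeouts, backoff with jitter, and cost or latency routing, which a production layer would also need.

```python
from typing import Protocol


class ProviderError(Exception):
    """Raised by a provider adapter for a failed (possibly transient) request."""


class CompletionProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


def complete_with_fallback(providers: list, prompt: str,
                           retries_per_provider: int = 2) -> tuple[str, str]:
    """Try each provider in order, retrying failures; return (provider name, output)."""
    errors: list[str] = []
    for provider in providers:
        for attempt in range(1, retries_per_provider + 1):
            try:
                return provider.name, provider.complete(prompt)
            except ProviderError as exc:
                errors.append(f"{provider.name} attempt {attempt}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))
```

Returning the provider name alongside the output gives the observability layer what it needs to attribute cost, latency, and quality to each vendor.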

In summary, such an architecture would require significant glue code and system integration beyond what a cloud vendor such as AWS or GCP alone can offer. It involves handling complex data flows between vendors, managing cross-platform scalability, and building custom security and performance monitoring layers. These challenges necessitate a comprehensive middleware layer, custom orchestration scripts, and extensive monitoring to ensure the system runs reliably across the different cloud platforms and service providers involved.

DevRev’s AgentOS

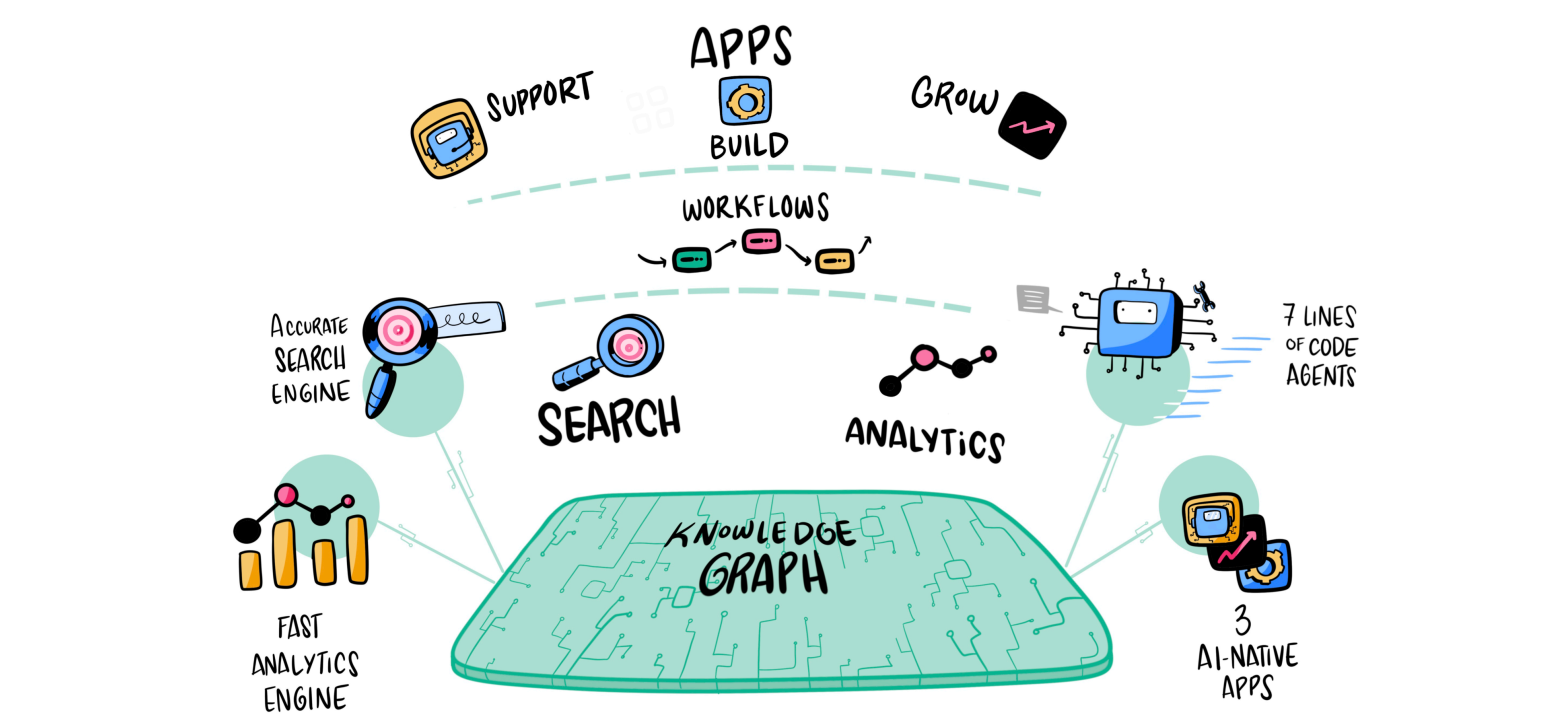

Given the complexities involved in building and managing a custom AI engine, as we covered in the section above, DevRev’s AgentOS Platform can significantly simplify the process by functioning as an AI operating system. Just like a traditional operating system manages computing resources, establishes user interfaces, and executes application software, DevRev AgentOS manages the AI ecosystem by abstracting and handling complex resources, providing versatile user interfaces, both graphical and programmatic, and executing intelligent AI agents.

A key component of this is the Knowledge Graph (KG), which acts as a dynamic context engine. Rather than merely fetching static information, it synthesizes real-time data from various structured and unstructured sources to give AI agents dynamic, situational awareness. The knowledge graph encapsulates customer, product, service, employee work, and user session data in an interlinked fashion.

Fig 3. Real-time data in the knowledge graph

This allows AI agents to make more informed decisions by understanding user requests and merging data across interconnected relationships. In this way, the platform not only simplifies the development, deployment, and scaling of AI-driven applications and workflows but also enhances the decision-making capabilities of AI agents through enriched context and deeper insights.
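One way to picture how interlinked entities give an agent situational context is a small typed-edge graph with a breadth-first neighborhood query. This is a hypothetical in-memory model with `type:id` node names chosen for illustration; it does not reflect DevRev’s actual knowledge graph schema or storage.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class KnowledgeGraph:
    """Tiny typed-edge graph: nodes are 'type:id' strings, edges carry a relation label."""
    nodes: dict = field(default_factory=dict)
    edges: dict = field(default_factory=lambda: defaultdict(list))

    def add_node(self, node_id: str, **attrs) -> None:
        self.nodes[node_id] = attrs

    def link(self, src: str, relation: str, dst: str) -> None:
        # Store both directions so context can be gathered from either end.
        self.edges[src].append((relation, dst))
        self.edges[dst].append((f"inverse:{relation}", src))

    def context(self, start: str, depth: int = 2) -> set:
        """Breadth-first neighborhood of a node, usable as situational context for an agent."""
        seen, frontier = {start}, [start]
        for _ in range(depth):
            next_frontier = []
            for node in frontier:
                for _, neighbor in self.edges[node]:
                    if neighbor not in seen:
                        seen.add(neighbor)
                        next_frontier.append(neighbor)
            frontier = next_frontier
        return seen
```

Starting from a customer, one hop reaches the tickets they opened, two hops reach the products those tickets concern, and three hops reach the people who own those products.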

The Data “River”

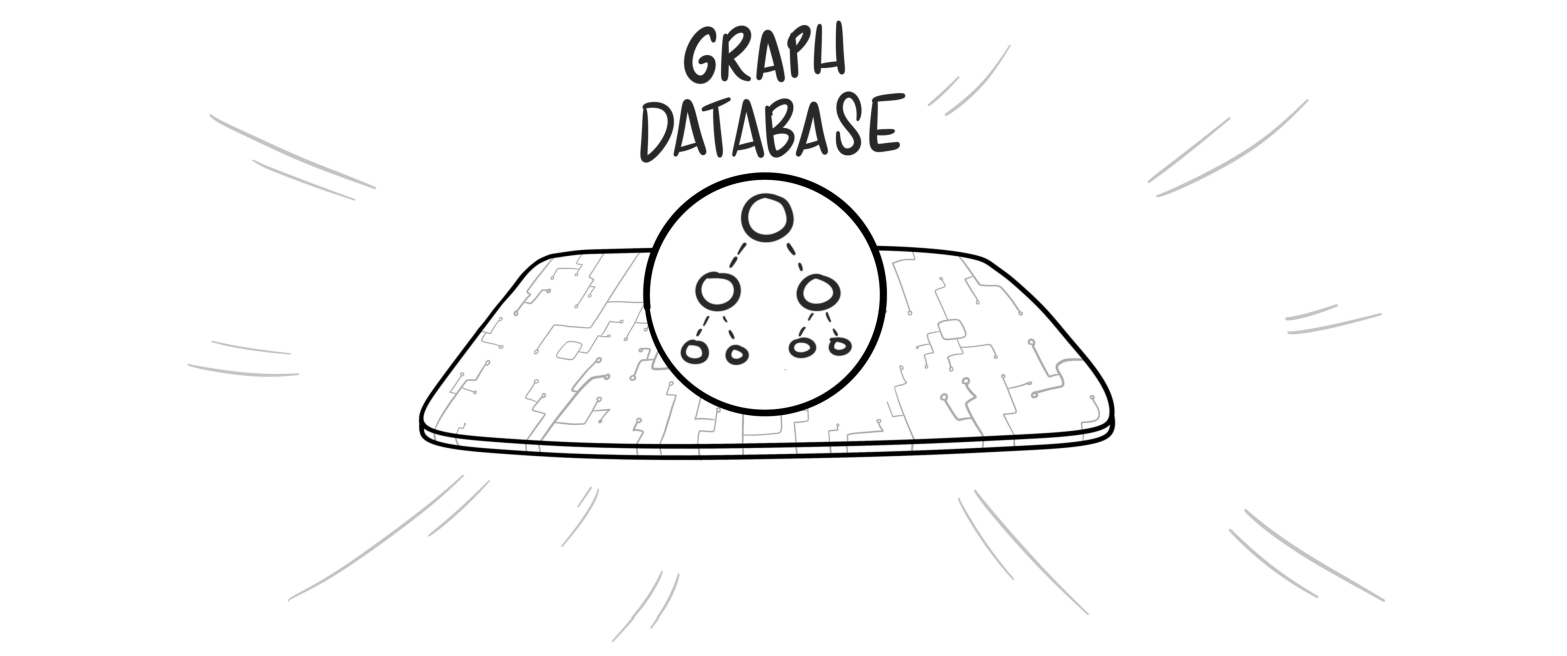

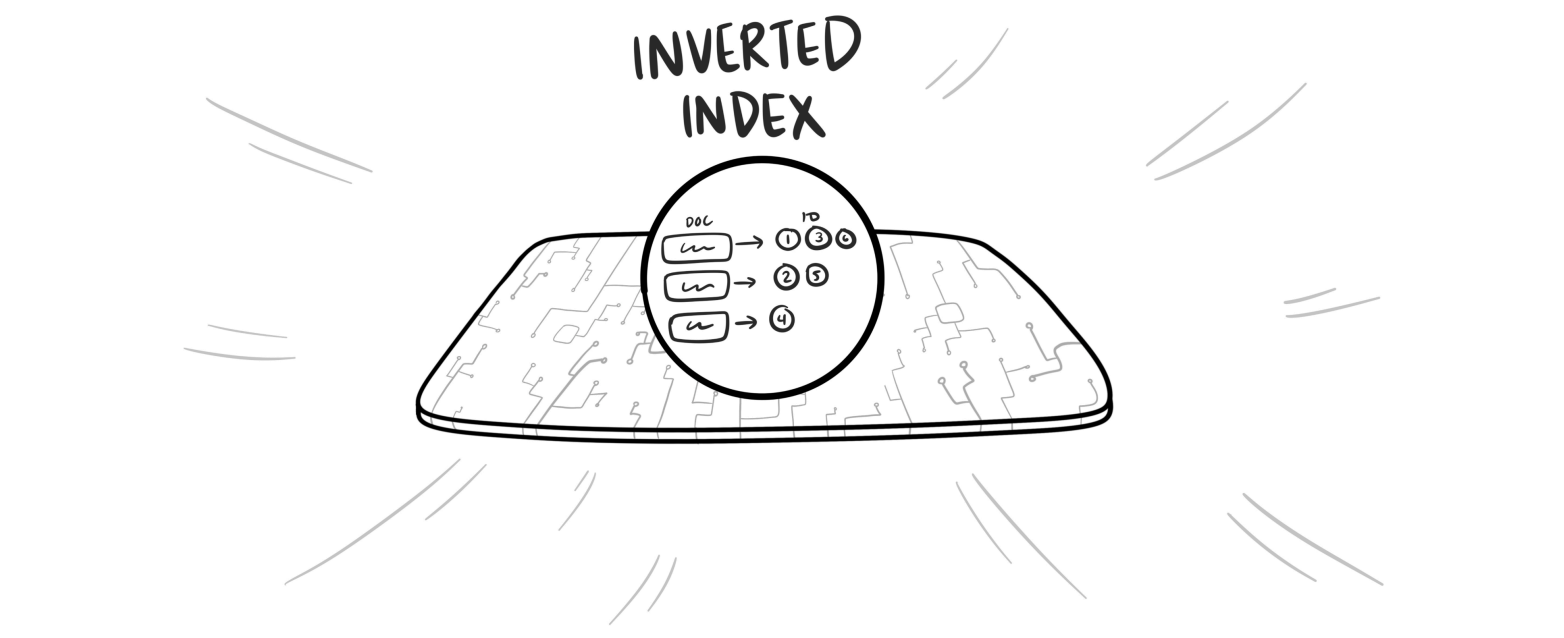

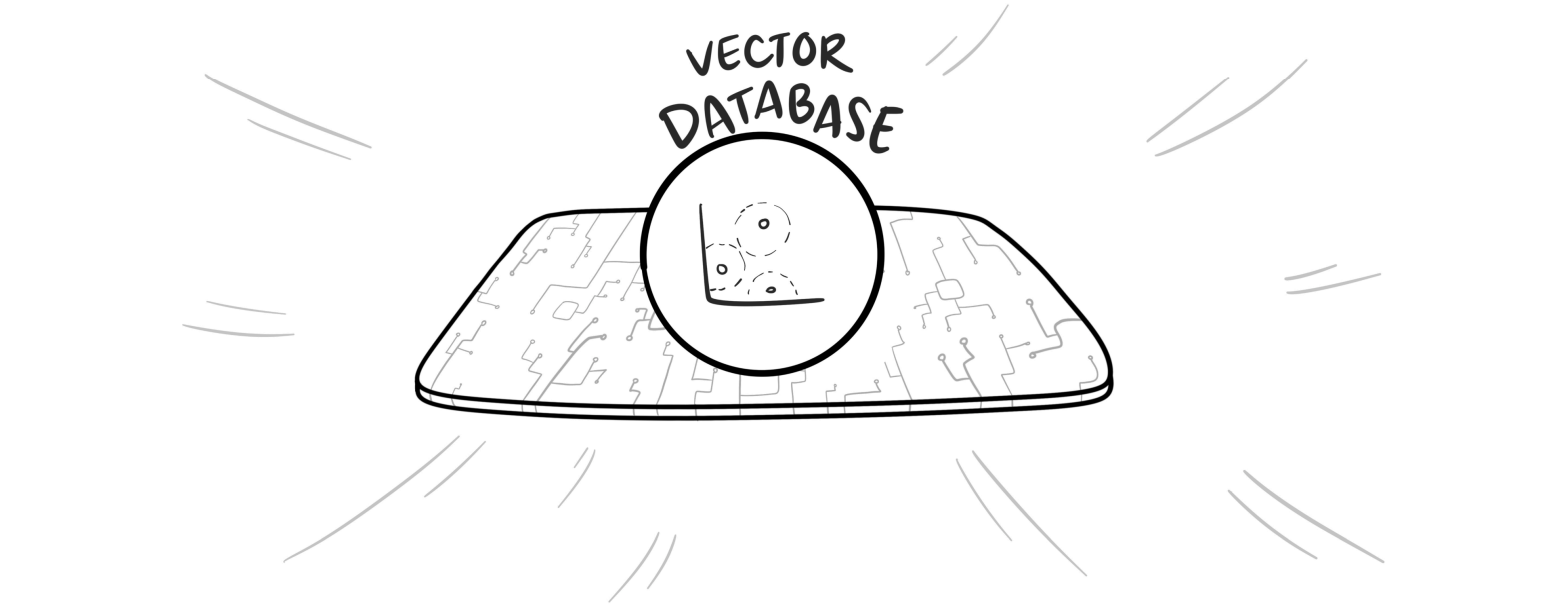

The knowledge graph for DevRev is not a single data representation but five (5) such data structures. An upstream graph database is hydrated via the company’s 2-way sync replication technology, called “Airdrop”; its data then flows downstream to a syntactic search engine, a semantic search engine, a data warehouse, and an edge-based in-memory time-series database for real-time visualizations.

Fig 4. An upstream graph database with entities and relationships

Fig 5. An inverted index for keyword-based (syntactic) search

Fig 6. A small-language model (SLM) powered embeddings database for semantic search

Fig 7. A large data warehouse midstream for batch jobs, RLHF analytics, and SQL queries

Fig 8. A downstream in-memory database for edge-based visualization and text-2-SQL analytics
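The data river described above can be sketched as an ordered change stream replayed into several downstream sinks. The example below, which assumes a simple hypothetical `ChangeEvent` format rather than DevRev’s actual CDC schema, feeds the same events into a keyword inverted index (in the spirit of Fig 5) and a stand-in analytics counter. Each sink removes an object’s stale state before applying the new version, so updates and deletes stay consistent downstream.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    op: str          # "upsert" or "delete" (hypothetical event format)
    object_id: str
    text: str = ""


class InvertedIndex:
    """Keyword (syntactic) search sink: token -> set of object ids."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.doc_tokens = {}

    def apply(self, ev: ChangeEvent) -> None:
        for tok in self.doc_tokens.pop(ev.object_id, set()):
            self.postings[tok].discard(ev.object_id)  # drop stale postings first
        if ev.op == "upsert":
            toks = set(re.findall(r"[a-z0-9]+", ev.text.lower()))
            self.doc_tokens[ev.object_id] = toks
            for tok in toks:
                self.postings[tok].add(ev.object_id)

    def search(self, term: str) -> set:
        return set(self.postings.get(term.lower(), set()))


class ChangeCounter:
    """Stand-in for a downstream analytics sink: counts operations by type."""

    def __init__(self):
        self.counts = defaultdict(int)

    def apply(self, ev: ChangeEvent) -> None:
        self.counts[ev.op] += 1


def fan_out(events: list, sinks: list) -> None:
    """Replay an ordered change stream into every downstream sink."""
    for ev in events:
        for sink in sinks:
            sink.apply(ev)
```

Because every sink consumes the same ordered stream, adding another downstream store (for example, an embeddings index) means adding one more sink rather than another point-to-point integration.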

Foundational Services

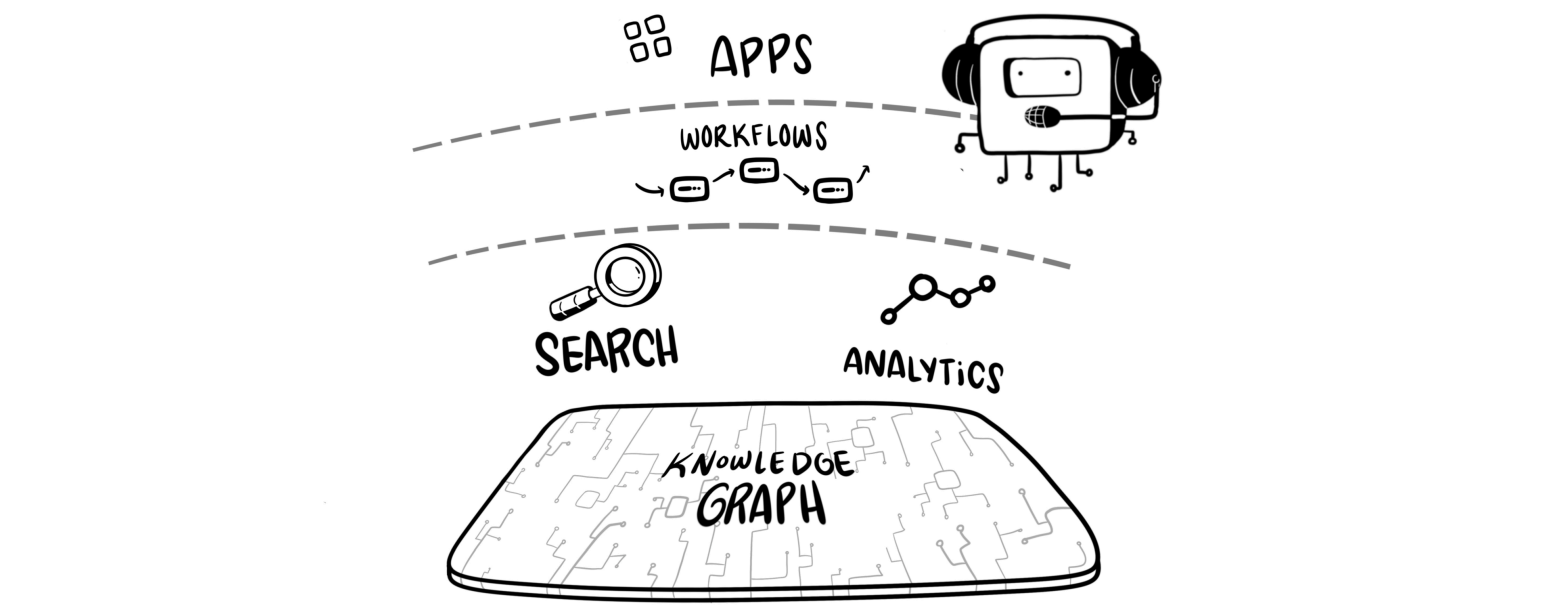

Once the knowledge graph has been expressed as these data structures, AgentOS is ready to provide a trifecta of services that would otherwise take years to build and maintain in an enterprise: search, analytics, and workflows. These services require (a) 2-way synchronization with existing systems, (b) authorization, (c) Cmd+K-style ease of use, (d) an extensible plug-in framework for crawl and summarization jobs, and (e) a compute framework for knowledge augmentation and reconciliation.

Fig 9. Three engines — search, analytics, and workflow — layered on top of an extensible knowledge graph

DevRev AgentOS also manages the resources of the AI engine: it abstracts the complexity of ingesting data into a contextual layer (the KG), provides extended compute that combines deterministic logic with semantic reasoning, and scales the various related services that have to work together to deliver the AI experience. Whether it’s executing Lambda functions, managing in-memory databases, or orchestrating distributed multi-tenant storage across different types of databases like columnar, relational, and vector, DevRev ensures that all the underlying infrastructure is handled and scaled seamlessly. The system can be extended with snap-ins, which are code packages that run in a managed environment in response to events. This means AI builders no longer need to worry about infrastructure concerns like maintaining a service, scaling it, or managing the storage layer. DevRev integrates and handles multiple backends, such as search (Wisp), vector embeddings (Honeycomb), change data capture (CDC), and Google BigQuery (Serengeti), simplifying the task of managing and retrieving data for AI applications.
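Snap-ins are described as code packages that run in response to events. The sketch below shows that general event-driven pattern with a hypothetical in-process `EventBus` and a handler registered for a made-up `ticket_created` event; it is not the DevRev snap-in SDK, and a managed runtime would add isolation, retries, and scaling around the same idea.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Routes events to registered handlers (a stand-in for a managed snap-in runtime)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type: str) -> Callable[[Handler], Handler]:
        def register(fn: Handler) -> Handler:
            self._handlers[event_type].append(fn)
            return fn
        return register

    def publish(self, event_type: str, payload: dict) -> int:
        """Invoke every handler for the event type; return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for fn in handlers:
            fn(payload)
        return len(handlers)


bus = EventBus()
tagged: list = []


@bus.on("ticket_created")
def tag_outages(payload: dict) -> None:
    # Hypothetical handler: record tickets whose title mentions an outage.
    if "outage" in payload.get("title", "").lower():
        tagged.append(payload["id"])
```

The builder writes only the handler; delivery, routing, and (in a managed platform) scaling are the runtime’s job.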

Moreover, the platform provides an intuitive and scalable user interface that caters to both technical builders and end users. It supports web, mobile, and API-based interactions, along with a full set of webhooks powered by our change data capture module and full support for object customization and subtypes, making it highly customizable for different kinds of users and their needs. Builders can define, test, and deploy AI agents through graphical or programmatic interfaces; developers can manage complex AI-aware workflows via webhooks and event-driven models; and end users can consume AI responses and rate their usefulness for analytics and automatic learning. Developers can build workflows that allow AI agents to automate tasks such as classifying documents, tagging objects, or mining the knowledge graph for specific use cases, all expressed in natural language. These agents can interact with multiple data sources via Airdrop, which brings data into the knowledge graph, with change data capture streams serving as the backbone that synchronizes changes across downstream data structures.

Finally, scheduling and executing AI agents through DevRev becomes a streamlined process that more closely resembles intent understanding than function calls, with built-in services such as authentication, authorization, scheduling, monitoring, recording, classification, clustering, deflection, deduplication, and security baked into the platform. The advanced security model provides multi-factor authentication and fine-grained authorization across all objects, ensuring that AI agents interact securely with data and applications. DevRev’s SQL notebooks, LangChain infrastructure, and command framework further enable users to create, inspect, experiment with, and refine AI workflows easily. AI agents can be enabled to self-learn and adapt to the data they shape in real time, feeding into downstream systems, evolving based on the latest insights and agent interactions, and offering enhanced decision-making for end users. In essence, DevRev allows these AI agents to operate across layers and applications, from customer support to document processing, all within a managed, scalable, and secure environment.
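Fine-grained, tenant-aware authorization can be sketched as a deny-by-default check: reject any cross-tenant access outright, then allow the object owner, then consult per-object role grants. The `Principal` and `Resource` shapes, and the rule that an agent acting for a user receives only the intersection of its own scope and that user’s rights, are assumptions for illustration, not DevRev’s documented policy model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Principal:
    user_id: str
    tenant_id: str
    roles: frozenset = frozenset()


@dataclass
class Resource:
    object_id: str
    tenant_id: str
    owner_id: Optional[str] = None
    grants: dict = field(default_factory=dict)  # role -> set of allowed actions (hypothetical)


def is_allowed(principal: Principal, action: str, resource: Resource) -> bool:
    """Deny by default; tenant isolation is checked before any grant."""
    if principal.tenant_id != resource.tenant_id:
        return False  # never cross tenant boundaries
    if resource.owner_id == principal.user_id:
        return True
    return any(action in resource.grants.get(role, set()) for role in principal.roles)


def agent_allowed(agent_actions: frozenset, principal: Principal,
                  action: str, resource: Resource) -> bool:
    """An agent acting for a user gets at most its own scope intersected with the user's rights."""
    return action in agent_actions and is_allowed(principal, action, resource)
```

Checking the tenant first means a misconfigured role grant can never leak data across customers.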

Conclusion

DevRev AgentOS simplifies the implementation of critical non-functional requirements (NFRs) for AI systems, allowing users to focus on business needs rather than AI infrastructure. The platform handles knowledge graph management, enabling organizations to build and maintain interconnected data structures that power AI insights. Features like Airdrop allow easy data sync from various sources, streamlining integration. DevRev also supports multi-tenancy, enabling secure, isolated management of data, models, and configurations across teams and customers while maintaining shared, scalable infrastructure.

The platform also integrates sophisticated AI modules, such as a customizable RAG engine and pluggable large language models (LLMs), allowing users to enhance AI outputs with real-time data retrieval and to swap between pre-trained models without overhauling the entire system. DevRev supports per-customer configurations, enabling personalized AI behavior and responses, which is crucial for delivering customized solutions. Additionally, it provides a workflow engine that lets users seamlessly combine deterministic processes with non-deterministic, AI-driven semantic decision-making nodes, offering state-of-the-art flexibility in automation that can extend beyond DevRev’s boundaries through custom code and API invocations. AI can even be used to construct and parse API payloads, enabling loose coupling to specific versions of external systems. The platform also allows customers’ end users to interact directly with the AI through multiple surfaces, making it viable for both internal and external users. With built-in observability capabilities, AI solutions can be monitored and scaled with ease. The platform also enforces strong security measures, such as multi-factor authentication (MFA) and fine-grained authorization, ensuring that data and resources are securely managed. Support for object customization and more ensures that users can tailor the platform to their specific needs, allowing them to focus on leveraging AI to drive business outcomes rather than dealing with technical boilerplate.
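A workflow that mixes deterministic and semantic steps can be modeled as a sequence of nodes that each transform a shared state. In the sketch below, the semantic node would normally call an LLM; a keyword stub stands in so the example runs offline. The node names, the `kind` labels, and the 100-unit refund threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

Step = Callable[[dict], dict]


@dataclass
class Node:
    name: str
    kind: str  # "deterministic" or "semantic" (a label used only for tracing)
    run: Step


def run_workflow(nodes: list, state: dict) -> tuple:
    """Execute nodes in order; return the final state and a trace of executed nodes."""
    trace = []
    for node in nodes:
        state = node.run(dict(state))  # pass a copy so each step returns an explicit new state
        trace.append(f"{node.kind}:{node.name}")
    return state, trace


def classify_intent(state: dict) -> dict:
    # Semantic node: a real system would call an LLM here; a keyword stub stands in.
    text = state["message"].lower()
    state["intent"] = "refund" if "refund" in text or "money back" in text else "other"
    return state


def route(state: dict) -> dict:
    # Deterministic node: a fixed business rule applied to the model's output.
    small_refund = state["intent"] == "refund" and state["amount"] <= 100
    state["queue"] = "billing" if small_refund else "review"
    return state


WORKFLOW = [
    Node("classify_intent", "semantic", classify_intent),
    Node("route", "deterministic", route),
]
```

Keeping the business rule in a deterministic node means the model only proposes an intent, while the routing decision stays auditable and testable.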

DevRev AgentOS addresses the most challenging aspects of building an AI engine by managing complex infrastructure, providing versatile and easy-to-use interfaces, and executing AI agents in a secure and scalable manner. It abstracts the technical complexity of data synchronization, real-time processing, and multi-tenancy, enabling AI builders to focus on high-value tasks like improving AI-powered business logic rather than worrying about infrastructure. Whether it’s leveraging tools like vector databases or managing custom workflows through snap-ins and webhooks, DevRev makes it easier to build, scale, maintain, and serve AI-driven systems across any enterprise, bringing three critical engines (search, analytics, and workflows) out of the box for any enterprise to build AI apps on top of.